Key takeaways:

- A/B testing helps you make decisions with data and avoid relying on guesswork.

- It works best when you test one change at a time and give it enough traffic and time to show results.

- The right tools and practices keep your tests reliable, user-friendly, and safe for SEO.

Every business runs into roadblocks. Sales don’t grow as expected, carts get abandoned, or campaigns don’t deliver.

Instead of guessing what went wrong, A/B testing gives you a simple way to find out. It works by showing two versions of the same element to different visitors.

According to a recent statistic, 60% of companies say A/B testing is one of the most valuable methods for improving conversion rates.

Let’s break down what A/B testing is, when it’s worth running, how to set up a proper test, the tools that make it easier, real-world examples of tests that work, and common mistakes to avoid.

What is A/B testing?

A/B testing, sometimes called split testing, is the practice of comparing two versions of something to see which performs better. Version A is the control, or the original, while Version B is the variation, or the new version you want to try.

Visitors are randomly shown one of the two versions, and their actions are tracked to find out which version helps you reach your goal more effectively.

How to run an A/B test

If you’re a startup or small business, running an A/B test can feel overwhelming at first, but the process is simpler than it looks. Here’s how it typically works:

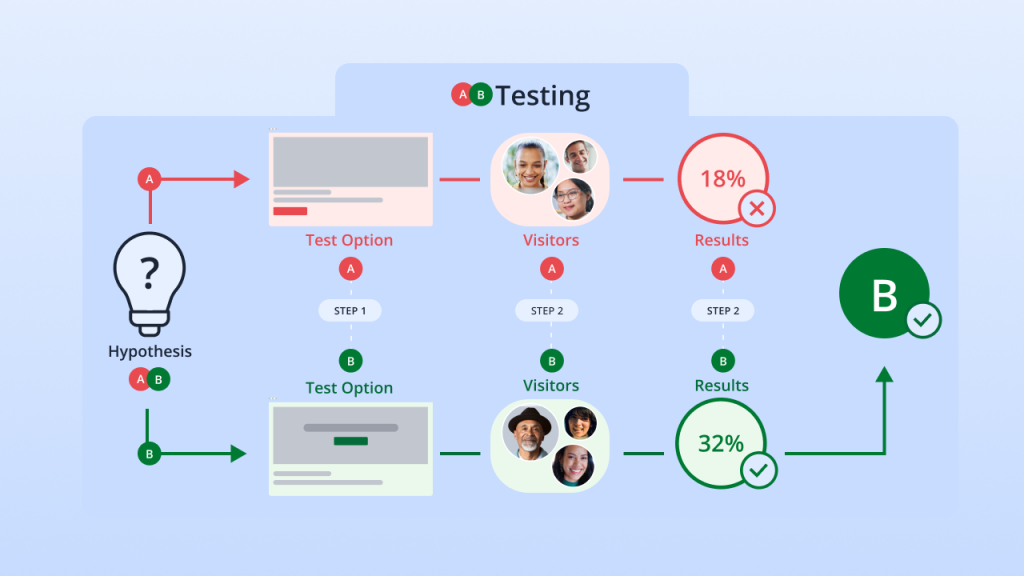

- Form a hypothesis. Decide what you want to change and why. A strong hypothesis keeps your test focused. For instance: “Changing the button text from ‘Submit’ to ‘Book Now’ will increase form completions.”

- Create two versions. Version A is your current design, while Version B is the change you want to test. Keeping everything else the same makes the results accurate.

- Split your audience. Half of your visitors see Version A; the other half see Version B. Random assignment avoids bias and gives you a fair comparison.

- Measure performance. Track results, like clicks, sign-ups, or sales. Define your primary success metrics clearly so you know exactly how to measure progress toward your business goal.

- Analyze results. Review the data to see which version performed better. This step prevents decisions based on gut feeling.

- Apply the winner. Roll out the stronger version to all visitors and lock in the improvement.

For example, if a local bakery wants to increase online orders, they might test two versions of their Order Now button: one green and one orange. If the orange button gets more clicks and orders, they know it’s the better choice to keep live.
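The "split your audience" step can be sketched in a few lines of code. This is a minimal illustration, assuming each visitor has some stable identifier such as a cookie value. The function name, the experiment name, and the visitor IDs are hypothetical, and hashing is just one common way to get a stable, roughly even split. It is not a description of how any particular testing tool works.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "order-button-color") -> str:
    """Deterministically place a visitor in variant A or B (roughly 50/50)."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor always sees the same version on every visit.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


# Hypothetical visitor IDs, for illustration only.
visitors = [f"visitor-{n}" for n in range(10_000)]
counts = {"A": 0, "B": 0}
for visitor in visitors:
    counts[assign_variant(visitor)] += 1

print(counts)  # the two groups should be close to equal in size
```

Because the assignment depends only on the visitor ID and the experiment name, a returning visitor keeps seeing the same button color, which keeps the comparison fair.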

Why do businesses need A/B testing?

Many small business owners ask themselves, “Do I need A/B testing?” The quick answer is yes, if you want to stop guessing what works and what doesn’t. Here are reasons why businesses should use A/B testing:

- Replaces opinions with data

- Improves the user experience

- Increases conversion rates

- Lowers bounce rates

- Gets more value from your traffic

- Reveals what your audience really wants

- Reduces the risk of major changes

- Builds a habit of continuous improvement

Replaces opinions with data

Without testing, most business decisions are based on intuition or team debates. A/B testing provides measurable evidence of what works.

For example, instead of arguing over whether “Save 20% Today” or “Shop Smart and Save” is the better headline, you can test both with real visitors. The outcome makes your choice obvious and eliminates the uncertainty.

Improves the user experience

User experience issues aren’t always visible. The design might look good, but it still frustrates visitors.

For instance, a form with five required fields may drive people away, while a shorter version with just three fields could double completions.

Small changes like these build smoother, more enjoyable experiences that keep customers engaged.

Increases conversion rates

Even a small increase in conversion rates can lead to major revenue growth. For example, consider an online clothing store where 2% of visitors make a purchase. By testing a simplified checkout process, the store increases its conversion rate to 2.5%. That half-a-percentage-point difference is a 25% relative increase, which can add up to hundreds of additional sales over time.
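To see how the numbers in the clothing store example play out, here is a small worked calculation. The 2% and 2.5% rates come from the example above. The figure of 10,000 visitors per month is an assumption added purely for illustration.

```python
def conversion_lift(baseline_rate: float, new_rate: float, visitors: int) -> dict:
    """Compare two conversion rates for the same number of visitors."""
    absolute_lift = new_rate - baseline_rate        # difference in rate
    relative_lift = absolute_lift / baseline_rate   # change relative to baseline
    extra_orders = visitors * absolute_lift         # additional conversions
    return {
        "absolute_lift": absolute_lift,
        "relative_lift": relative_lift,
        "extra_orders": extra_orders,
    }


# Assumed traffic: 10,000 visitors per month (hypothetical).
result = conversion_lift(baseline_rate=0.02, new_rate=0.025, visitors=10_000)

print(f"Absolute lift: {result['absolute_lift'] * 100:.1f} percentage points")  # 0.5
print(f"Relative lift: {result['relative_lift']:.0%}")                          # 25%
print(f"Extra orders per month: {round(result['extra_orders'])}")               # 50
```

Under that traffic assumption, the store gains about 50 extra orders a month, or roughly 600 a year, from the same number of visitors.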

If you want to know more about improving conversions, we have a full guide on how to improve conversion rates, and the methods businesses use to achieve them.

Lowers bounce rates

When visitors leave after viewing only one page, something isn’t working. Maybe the design feels off, or the content doesn’t match their expectations. A/B testing shows you which adjustments encourage people to stay longer.

For example, an online publisher might compare two article layouts: one with long blocks of plain text and another with visuals and clear subheadings. The version with visuals often keeps readers engaged longer and reduces bounce rates.

If you want your site visitors to stay longer, check out our guide on how to reduce bounce rates on your website.

Gets more value from your traffic

Acquiring new visitors is costly. A/B testing helps you get more from the visitors you already have by improving the effectiveness of your existing pages and campaigns.

A local service provider, for instance, might test two versions of its service page. If one version delivers 30% more inquiries, that’s a better return on the same ad spend.
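A quick calculation shows what "a better return on the same ad spend" means in practice. Only the 30% increase comes from the example above. The ad budget and the baseline number of inquiries are made-up figures for illustration.

```python
# Hypothetical figures: only the 30% uplift comes from the example.
ad_spend = 1_000.00          # monthly ad budget, same for both versions
inquiries_a = 100            # inquiries from the original service page
inquiries_b = inquiries_a * 1.30  # 30% more inquiries from the new version

cost_per_inquiry_a = ad_spend / inquiries_a
cost_per_inquiry_b = ad_spend / inquiries_b
saving = 1 - cost_per_inquiry_b / cost_per_inquiry_a

print(f"Version A: ${cost_per_inquiry_a:.2f} per inquiry")  # $10.00
print(f"Version B: ${cost_per_inquiry_b:.2f} per inquiry")  # $7.69
print(f"Cost per inquiry drops by {saving:.0%}")            # 23%
```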

Reveals what your audience really wants

Over time, A/B testing highlights preferences across different groups of users. You might find new visitors respond better to detailed product descriptions, while returning customers prefer faster checkout options.

These insights allow you to adapt your strategies to match audience needs and build stronger customer loyalty.

Reduces the risk of major changes

Rolling out large, untested changes can backfire. A/B testing reduces this risk by experimenting with smaller adjustments first.

Instead of redesigning your entire homepage, you might start by testing a new hero banner. If it improves engagement, you can roll it out across more pages with confidence.

Builds a habit of continuous improvement

Customer habits change all the time. What works today might not work a few months from now. A/B testing helps you keep up by making small improvements on a regular basis. Over time, those small wins add up and help your business grow without losing momentum.

If you want to track engagement, tools like Google Analytics or SEMrush show you page views, bounce rates, time on page, and conversions. You can see where people lose interest and where they stay engaged, which helps you decide what to try next.

When you should run an A/B test

A/B testing is not about testing everything; it’s about testing the right things at the right time. Here are the situations where running a test makes sense:

- When you have an idea but no proof. If you think a new headline, button, or layout might work better, A/B testing shows you if you’re right.

- When a change feels risky. Before rolling out a big update, test it on part of your audience to see how it performs.

- When you want steady improvements. Testing one small change at a time helps you keep making your site or app better.

- When you want to improve user experience. Even small tweaks, like moving a button or shortening a form, can make things easier for customers.

- When running marketing campaigns. You can test subject lines, ads, or landing pages to see which version gets more clicks or sales.

- When something isn’t working. If a page, form, or feature underperforms, testing helps you figure out what change could fix it.

- When launching something new. A/B testing a new product page or feature before a full release shows how people respond.

Here’s a quick self-check:

- Do you get enough visitors or users each week?

- Do you know the one goal you want to improve (sales, sign-ups, clicks)?

- Can you create at least one alternative version to test?

- Are you only changing one thing at a time?

If you can say yes to these, you’re ready to run an A/B test.
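The first question in the self-check, whether you get enough visitors, can be estimated before you start. The sketch below uses a standard sample-size formula for comparing two conversion rates. The 5% significance level, 80% power, the 2% to 2.5% rates (borrowed from the clothing store example), and the 5,000 weekly visitors are all assumptions for illustration, so treat the output as a rough guide rather than a rule.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, expected_rate: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version to detect the difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


needed = visitors_per_variant(baseline_rate=0.02, expected_rate=0.025)
print(f"Visitors needed per version: {needed}")  # roughly 13,800

# Hypothetical traffic: 5,000 visitors per week, split across both versions.
weekly_visitors = 5_000
weeks = ceil(2 * needed / weekly_visitors)
print(f"Estimated test length: {weeks} weeks")   # 6
```

Smaller expected improvements need far more visitors, which is why low-traffic sites often struggle to get a trustworthy result.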

When you shouldn’t run an A/B test

A/B testing isn’t always the right approach. There are times when running a test can waste resources or lead to misleading results. Here are the main situations where A/B testing is unnecessary:

- You don’t have enough traffic. A/B tests need a steady flow of visitors and conversions to show differences. If your site gets fewer than 1,000 visitors a month or only a handful of conversions, the test may take too long or give results you can’t rely on.

- The cost outweighs the benefit. If the time, tools, or resources needed to run a test are greater than the improvement, it’s not worth pursuing.

- You’re fixing technical issues. Bugs, broken buttons, or slow load times don’t need a test; they need a direct fix.

- You’re testing too many elements at once. A/B testing works best when you change one thing at a time. If you change multiple things together, you won’t know which one caused the result.

- You don’t have a clear hypothesis. A test without a clear problem, proposed change, and expected outcome will leave you with meaningless results.

- You’re tracking vanity metrics. If the metric doesn’t connect to a business goal like sales, leads, or engagement, the test won’t provide useful insights.

- It’s a holiday or special event period. User behavior isn’t typical during holidays, so tests run at these times can produce skewed or unreliable results.

If you find yourself in one of these situations, focus on fixing the basics or gathering more traffic before testing. A/B testing is powerful, but only when the conditions are right.

A/B testing examples

Seeing A/B testing in action makes it easier to understand how small changes can create meaningful results. Here are some of the most common areas where businesses run tests:

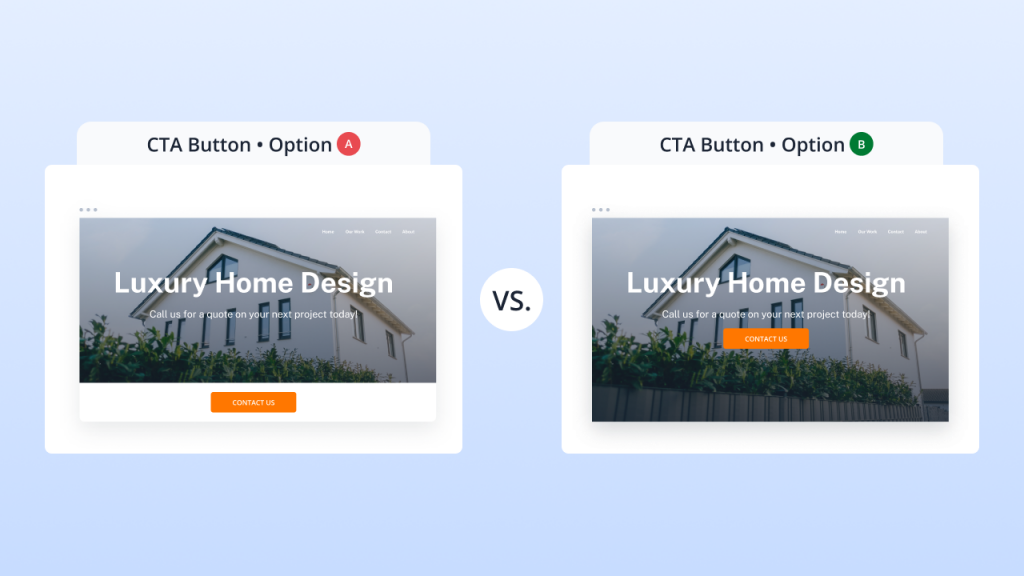

CTA placement

One of the most common web page tests is CTA placement. In one version, the button might sit at the bottom of the hero image, while in another it’s placed prominently at the center.

The difference may seem small, but the right placement can catch attention faster and encourage more visitors to click through.

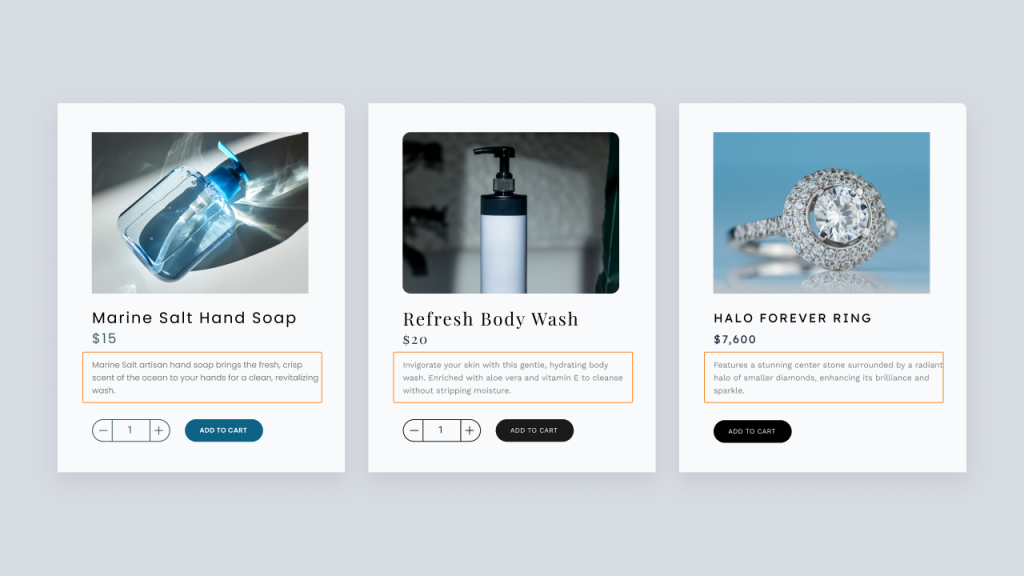

Product description length

A shorter description of two or three lines might appeal to shoppers who want quick highlights, while a longer version with detailed specs and benefits can reassure buyers who need more information before making a decision. Testing both helps you discover which approach convinces more people to purchase.

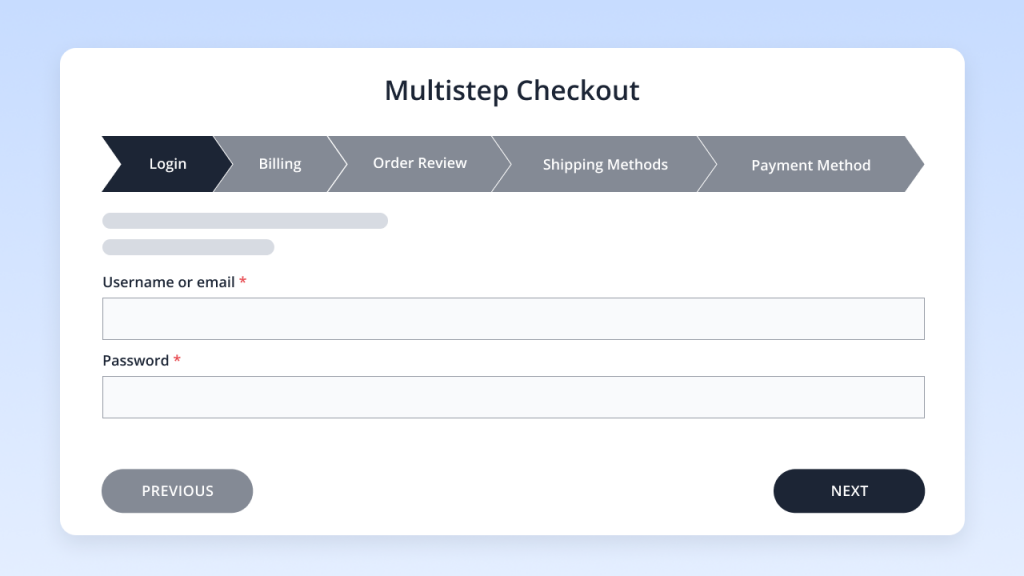

One-page vs. multi-step checkout

Checkout is a critical point where many businesses lose customers. One common test compares a single-page checkout with a multi-step process. While a one-page layout makes everything visible at once, a step-by-step flow breaks the process into smaller tasks. Testing helps reveal which approach leads to fewer abandoned carts and more completed orders.

Which tools are used for A/B testing?

There are many tools for A/B testing, each offering different features depending on how simple or advanced your needs are. Here are some of the most popular A/B testing tools:

- Visual Website Optimizer (VWO)

- Crazy Egg

- Optimizely

- Omniconvert

- UsabilityHub

Visual Website Optimizer (VWO)

VWO lets you set up tests without having to edit your site’s code. Its visual editor allows you to change headlines, swap images, or adjust button text with a few clicks. It also lets businesses test features across devices or within the checkout flow.

Crazy Egg

Crazy Egg works by adding a small code snippet to your site. From there, you can test different versions of a page and watch the results in real time.

It also provides heat maps and session recordings, which show you where people click and how they move through your site. These extra insights help you understand not only which version performs better, but also why.

Optimizely

Optimizely helps you test multiple variations of a page and show different versions to visitors depending on things like where they’re located or which device they’re using.

For companies that need to run tests across websites, apps, and other digital channels, it also provides tools to manage experiments in one place.

Some platforms also support split URL testing, which compares entirely different web pages against each other instead of small changes on the same page. This is useful when testing new layouts or complete redesigns.

Omniconvert

Omniconvert allows you to run tests by editing HTML, CSS, or JavaScript, and it can use real-time data like cart value or customer location to create variations.

This makes it useful for businesses that want to run more customized experiments beyond simple page tweaks.

UsabilityHub

UsabilityHub isn’t a traditional A/B testing platform but focuses on user research. It lets you run tasks like comparing two design versions, checking how people navigate a page, or measuring first impressions.

You can invite your own customers to participate or recruit testers from its large panel. While it won’t split traffic like standard A/B tools, it’s helpful for gathering early feedback before running a full test.

Common mistakes and how to avoid them

Rushing through the testing process can lead to misleading results and wasted effort. Here are the most common mistakes businesses make and how to avoid them:

- Ending tests too early

- Testing too many things at once

- Ignoring mobile users

- Forgetting SEO risks

Ending tests too early

A common mistake is stopping a test as soon as one version starts to show results. Early numbers can be misleading.

Maybe Version B looks like it’s winning after 100 visits, but once you reach 1,000, the difference disappears. Ending too early means you risk making decisions on incomplete data.

How to avoid it

Run your test for at least 2–4 weeks. Smaller sites need that time to gather enough data, while bigger sites may see results sooner. Ending too early can make you think a change works when it really doesn’t.

You can also use an A/B test calculator to check if you’ve collected enough data before stopping. Aim for statistical significance so you know the winning version isn’t just a random spike but a real improvement.
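For readers curious about what an A/B test calculator does behind the scenes, here is a minimal sketch of one common approach, a two-proportion z-test. The visitor and conversion counts are invented for illustration, and real calculators may use different methods, so read this as an example of the idea rather than the formula any specific tool uses.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # combined conversion rate
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided test
    return z, p_value


# Hypothetical early snapshot: 100 visitors per version.
_, p_early = two_proportion_z_test(conv_a=2, n_a=100, conv_b=5, n_b=100)
print(f"Early p-value: {p_early:.3f}")  # above 0.05, so not yet significant

# Hypothetical later snapshot: 14,000 visitors per version.
_, p_late = two_proportion_z_test(conv_a=280, n_a=14_000, conv_b=350, n_b=14_000)
print(f"Later p-value: {p_late:.3f}")   # below 0.05, so likely a real difference
```

In the early snapshot, Version B converts at more than double the rate of Version A, yet the sample is too small to rule out chance. A p-value below 0.05 is a widely used threshold for statistical significance, though it is a convention and not a guarantee.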

Testing too many things at once

A/B testing works by isolating one variable. If you change three things at once, like a new headline, a different image, and a new button color, you won’t know which change made the impact.

The result may look good, but it won’t tell you what’s actually responsible for the improvement.

How to avoid it

If you want to test multiple changes together, consider multivariate testing instead. Unlike A/B testing, it shows how combinations of elements impact performance, but it requires more traffic and is more complex to run properly.
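The reason multivariate testing needs more traffic becomes clear when you count the combinations. The sketch below lists every version you would need to show for three elements with two options each. The headlines and button colors are borrowed from earlier examples in this article, and the image options are hypothetical.

```python
from itertools import product

# Two options for each of three page elements.
elements = {
    "headline": ["Save 20% Today", "Shop Smart and Save"],
    "image": ["lifestyle photo", "product photo"],  # hypothetical options
    "button_color": ["green", "orange"],
}

# Every possible combination of one option per element.
combinations = [
    dict(zip(elements, values)) for values in product(*elements.values())
]

print(f"Versions to test: {len(combinations)}")  # 8
for combo in combinations:
    print(combo)
```

A simple A/B test splits traffic two ways. Here the same traffic is split eight ways, so each version receives only a fraction of the visitors and takes much longer to produce a reliable result.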

Ignoring mobile users

Many businesses design and test for desktops first, but mobile users often make up the majority of traffic.

If you only look at desktop results, you could think a variation is a winner, while on mobile, it performs worse. Ignoring mobile testing risks pushing changes that frustrate a big part of your audience.

How to avoid it

Always check results on both desktop and mobile. Design your variations to be responsive and review the data separately for each device type. What works on a laptop might not work on a phone, so don’t assume the results are the same everywhere.
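Reviewing data separately for each device type can look like the sketch below. All of the numbers are made up to illustrate the point: Version B wins overall and on desktop, but performs worse on mobile.

```python
# Hypothetical results: (variant, device) -> (visitors, conversions)
results = {
    ("A", "desktop"): (2000, 60),
    ("B", "desktop"): (2000, 90),
    ("A", "mobile"): (3000, 75),
    ("B", "mobile"): (3000, 54),
}


def rate(visitors: int, conversions: int) -> float:
    """Conversion rate as a fraction of visitors."""
    return conversions / visitors


# Conversion rate for each variant on each device.
by_device = {key: rate(*value) for key, value in results.items()}

# Conversion rate for each variant across all devices combined.
overall = {}
for variant in ("A", "B"):
    visitors = sum(v for (var, _), (v, _c) in results.items() if var == variant)
    conversions = sum(c for (var, _), (_v, c) in results.items() if var == variant)
    overall[variant] = rate(visitors, conversions)

for variant, r in overall.items():
    print(f"overall  {variant}: {r:.2%}")
for (variant, device), r in sorted(by_device.items(), key=lambda item: item[0][1]):
    print(f"{device:8} {variant}: {r:.2%}")
```

Looking only at the combined numbers, Version B appears to be the winner. The per-device breakdown shows that rolling it out everywhere would hurt mobile visitors, who make up the larger share of traffic in this example.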

Forgetting SEO risks

A/B testing can sometimes cause problems for search rankings if not set up properly. For example, if Google sees two versions of the same page without clear instructions, it might treat them as duplicate content.

Using the wrong type of redirect or leaving test pages live too long can also hurt your rankings.

SEO-safe testing checklist:

- Use 302 redirects for test pages. A 302 is a type of redirect that tells Google a change is temporary. This way, the test page won’t replace your original page in search results.

- Add rel=canonical tags. This is a small line of code that tells Google, “This test page is a copy — treat the original page as the main one.” It prevents duplicate content issues.

- Keep tests short-term. Once you know which version works best, end the test and keep the winner. Leaving test versions running for months can confuse search engines.

- Avoid cloaking. Cloaking means showing one version to search engines and a different one to your visitors. Google sees this as misleading and can penalize your site for it.

- Be careful with high-traffic pages. If you’re testing a page that brings in a lot of visitors from search, double-check your setup. A mistake here can have a bigger impact on your traffic.
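The first two items on the checklist can be illustrated with a short sketch. It assumes a split URL test where the variant lives at its own address. The URLs and function names are hypothetical, and most testing tools handle these details for you, so this is only meant to show what a temporary redirect and a canonical tag look like.

```python
from html import escape
from http import HTTPStatus

# Hypothetical URLs for the original page and the test variant.
ORIGINAL_URL = "https://www.example.com/pricing"
VARIANT_URL = "https://www.example.com/pricing-b"


def redirect_for(variant: str):
    """Return (status_code, headers) for a visitor assigned to a variant."""
    if variant == "B":
        # 302 Found signals a temporary redirect, unlike a permanent 301.
        return HTTPStatus.FOUND.value, {"Location": VARIANT_URL}
    return HTTPStatus.OK.value, {}  # Version A stays on the original page


def canonical_tag(original_url: str) -> str:
    """Build the tag to place in the <head> of the variant page."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


print(redirect_for("B"))
# (302, {'Location': 'https://www.example.com/pricing-b'})
print(canonical_tag(ORIGINAL_URL))
# <link rel="canonical" href="https://www.example.com/pricing">
```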

Turn your tests into results

A/B testing is effective when you know when to use it, what to test, and how to run it properly. By applying the steps, tools, and examples we covered, you can make smarter choices that improve conversions and create a better experience for your customers.

To get started, make sure your website is easy to update and built for testing. We offer a user-friendly website builder, reliable hosting, SEO services, and professional business email—everything you need to create, test, and optimize your online presence. With the right tools in place, your A/B tests can lead to real, measurable growth.

Frequently asked questions

What is A/B testing good for?

A/B testing is good for improving the parts of your website or campaigns that affect conversions. Businesses use it to get more sales, sign-ups, or form completions by finding out which version of a page, button, or message works better.

It’s also good for learning what your audience prefers and improving user experience. By testing layouts, headlines, or navigation, you can see what makes it easier for people to engage, stay longer, and act.

Which A/B testing tool is best for beginners?

If you’re just starting out, it’s best to use a tool that’s easy to set up and doesn’t require technical skills. VWO has beginner-friendly plans, and Crazy Egg is also a good option since it’s straightforward and includes extra insights like heat maps.

Do I need a lot of traffic to run A/B tests?

Yes, you’ll need a steady flow of visitors for A/B testing to work. If only a few people see your pages each week, the results won’t be meaningful.

Most tests need at least a few hundred conversions or several thousand visits before the results can be trusted. If your site doesn’t have that kind of traffic yet, focus on user feedback, surveys, or recordings to learn what’s working and what to improve.